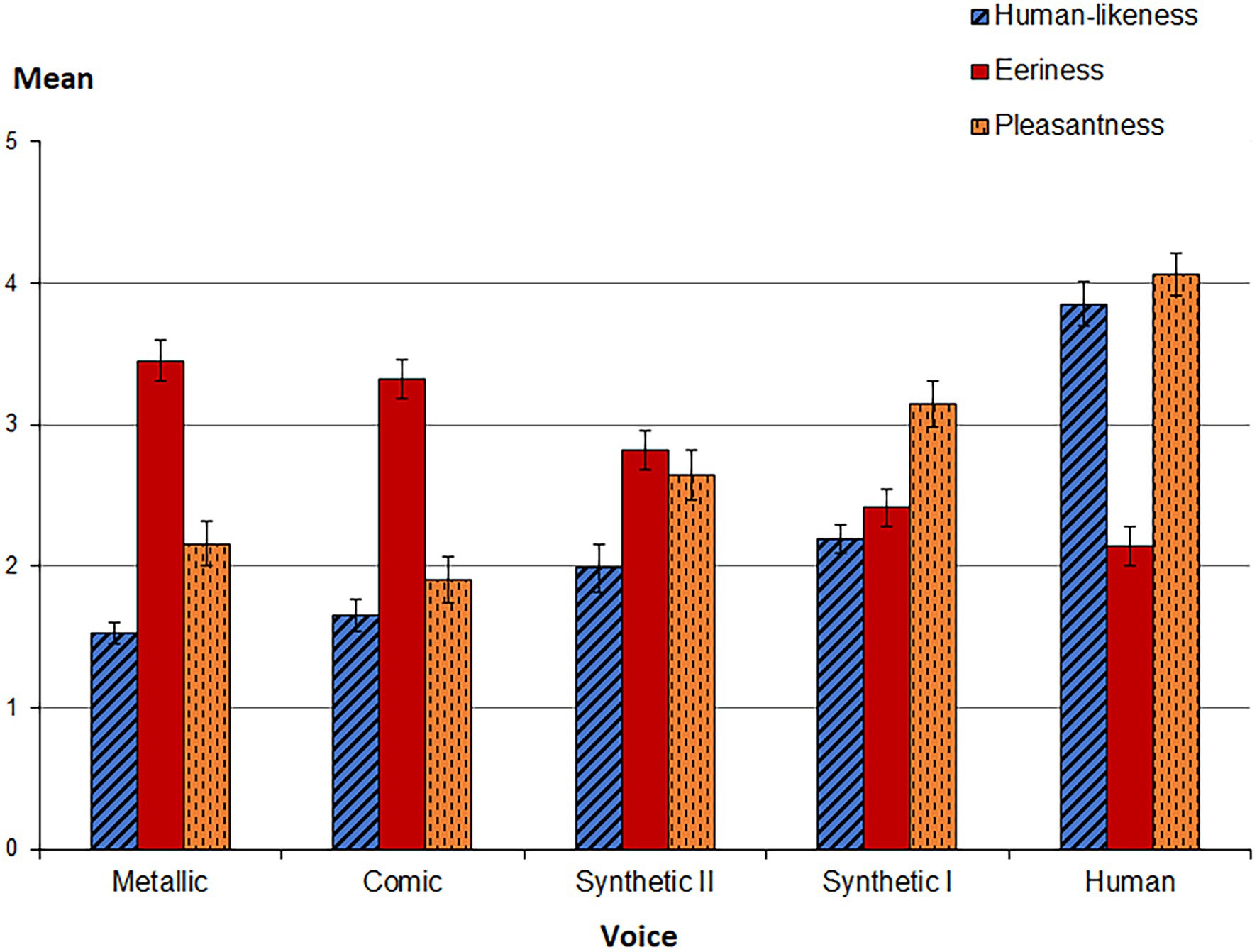

Frontiers | Robot Voices in Daily Life: Vocal Human-Likeness and Application Context as Determinants of User Acceptance | Psychology

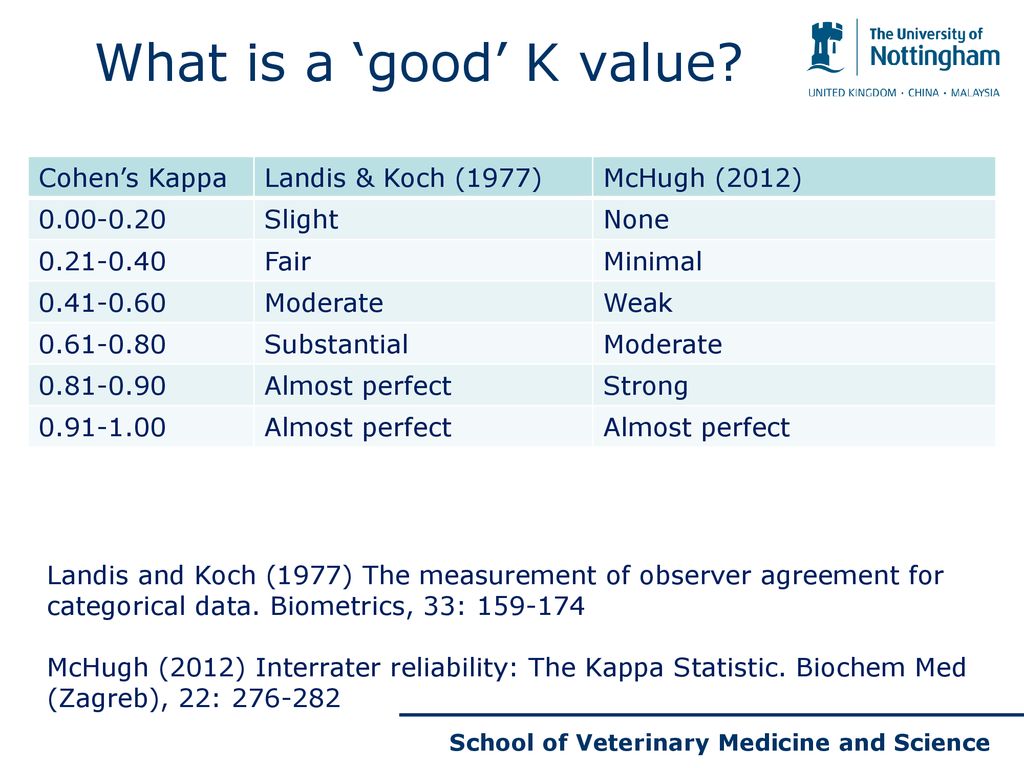

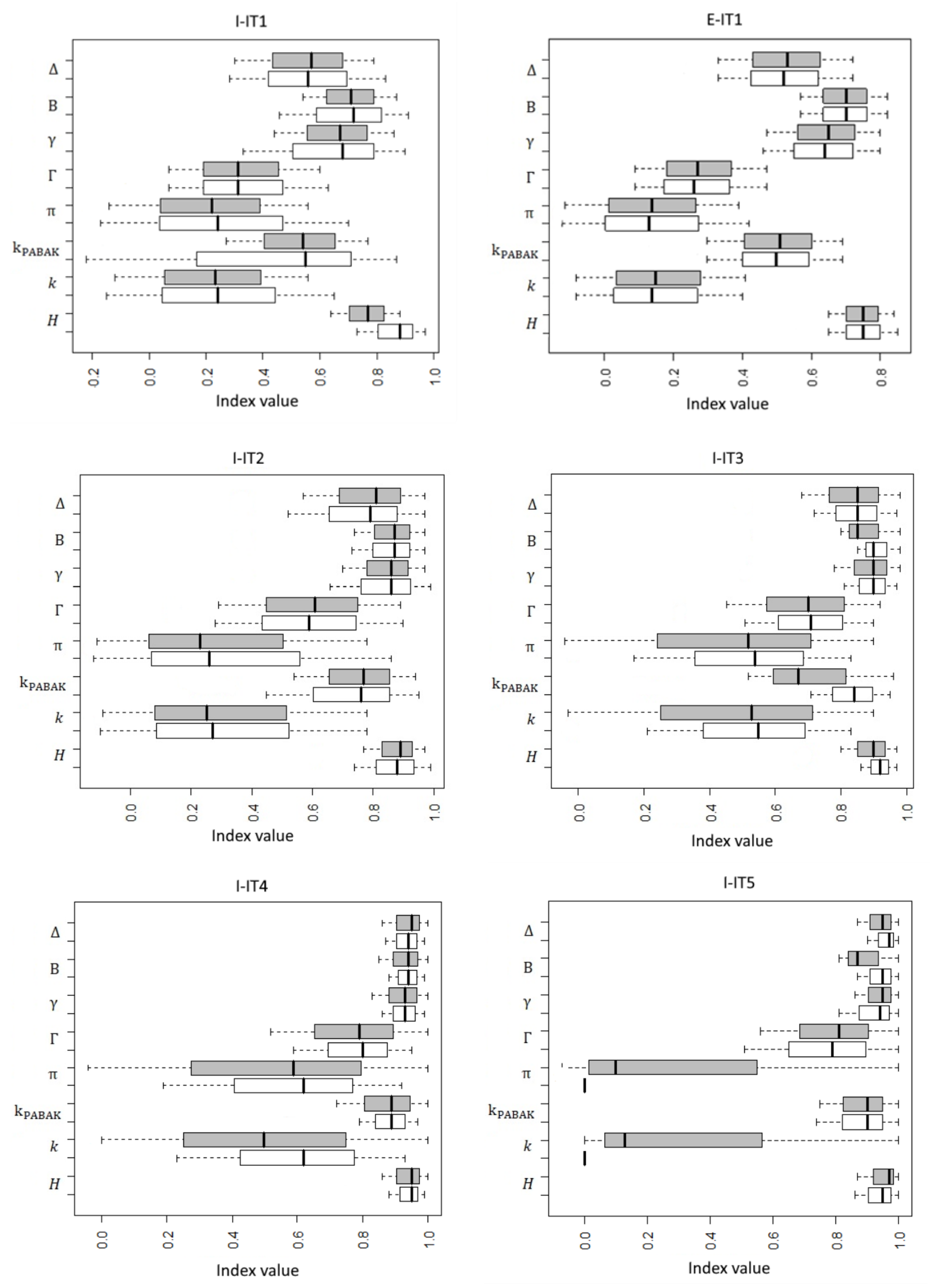

Animals | Evaluation of Inter-Observer Reliability of Animal Welfare Indicators: Which Is the Best Index to Use?

Powerful Exact Unconditional Tests for Agreement between Two Raters with Binary Endpoints | PLOS ONE

B.1 The R Software. R FUNCTIONS IN SCRIPT FILE agree.coeff2.r If your analysis is limited to two raters, then you may organize y

"The Measurement of Interrater Agreement". In: Statistical Methods for Rates and Proportions (Third Edition)

An Application of Hierarchical Kappa-type Statistics in the Assessment of Majority Agreement among Multiple Observers